Note: For the implementation details of my SDD experiments, see my demo walkthrough doc.

If you’ve spent any time with AI coding agents, you know the thrill. You ask for an expense tracker, it generates one. You ask for a menu feature, it adds it. You point out a bug, it proposes a fix. It feels like magic—until it doesn’t.

Inevitably, the model starts hallucinating features, forgetting earlier decisions, or creating code paths you never asked for. You try to rein it in with a custom instructions file, but it quickly falls out of date. Before long, you’re wrestling with the very agent that was supposed to save you time.

Spec-Driven Development (SDD) is the antidote to this chaos.

Rather than letting code (and an over-eager LLM) dictate direction, SDD gives us structure, guardrails, and a development rhythm that creates clarity instead of drift.

What Is Spec-Driven Development (SDD)?

Specifications can make or break any project. A few years ago, for example, I was in charge of a development team building a product catalog Web API. Right on schedule, two weeks before we were due to roll out, the resident silverback software architect dropped a bomb on us: a late-breaking requirement that would have instantly multiplied the project’s complexity tenfold and made us months late. Having a strict written requirement I could point to (in this case, a response time under 0.2 seconds) and a near-term delivery date acted as a magic shield that kept us on track.

Specs can be both a shield and a trap. As I wrote in my book, it’s the nonfunctional requirements (which are often implicit or poorly understood by the project team) that can delay or sink a software project. Database standards, authorization and security requirements, lengthy and late-breaking deployment policies: the fog of the unknown has us in its grip.

Spec-Driven Development (SDD) is meant to address this gap. Instead of code leading the way while the project’s goals and context fall hopelessly behind, the spec drives everything. Implementation, checklists, and task breakdowns are no longer vague.

The promise is that SDD uses AI capabilities and agentic development the right way. It amplifies a developer’s effectiveness by automating or handing off repetitive work that an agent can often do much more quickly and effectively, leaving us to do the creative work humans do best: refactoring, directing and steering code development, and thinking critically about the best path for each feature.

I like this write-up on the GitHub blog by Den Delimarsky, one of the Spec Kit co-authors:

Instead of coding first and writing docs later, in spec-driven development, you start with a (you guessed it) spec. This is a contract for how your code should behave and becomes the source of truth your tools and AI agents use to generate, test, and validate code. The result is less guesswork, fewer surprises, and higher-quality code.

The vibe coding videos I’ve seen are mostly greenfield projects at POC/MVP-level complexity. SDD, I think, has the potential to be ubiquitous. It could fit almost anywhere, even in very complex, monolithic app structures, and it could help with large existing legacy applications. It also enforces development standards that can prevent a lot of wasted time and effort.

Let’s start with a quick overview of the process.

Specify, Plan, Tasks, Implement: A Four-Step Dance

Instead of jumping straight into coding (or vibe-coding your way into a corner), SDD follows a four-step loop:

1. /specify: Describe what you want. High-level aims, user value, definitions of success. No tech stack. No architecture.

2. /plan: Decide how to build it. Architecture, constraints, dependencies, standards, security rules, and any nonfunctional requirements.

3. /tasks: Break it down into small, testable, atomic units of work that the agent can execute safely.

4. /implement: Generate and validate the code. TDD is mandated here: tests first, then implementation, then refinement.
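To make the ordering concrete, here is a minimal Python sketch that treats the loop as a gate: each phase refuses to run until every earlier phase has produced an artifact. The `SddProject` class, its `run` method, and the sample artifact strings are hypothetical illustrations. Spec Kit drives these phases through agent commands, not through anything like this class.

```python
from dataclasses import dataclass, field

# Hypothetical model of the SDD loop. Phase names mirror the Spec Kit
# commands; the class and method names are illustrative only.
PHASES = ["specify", "plan", "tasks", "implement"]


@dataclass
class SddProject:
    artifacts: dict = field(default_factory=dict)  # phase name -> artifact text

    def run(self, phase: str, output: str) -> None:
        if phase not in PHASES:
            raise ValueError(f"unknown phase: {phase}")
        idx = PHASES.index(phase)
        # Enforce ordering: /plan needs a spec, /tasks needs a plan, and so on.
        missing = [p for p in PHASES[:idx] if p not in self.artifacts]
        if missing:
            raise RuntimeError(f"cannot run /{phase} before /{missing[0]}")
        self.artifacts[phase] = output


project = SddProject()
project.run("specify", "Photo album site: drop photos, share with friends")
project.run("plan", "Node backend, React front end")
try:
    project.run("implement", "...")  # skipping /tasks on purpose
except RuntimeError as err:
    print(err)  # cannot run /implement before /tasks
```

The point of the gate is the same one the article makes: code generation only happens once the spec, plan, and task list exist.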

Starting with a constitution is a game changer because, as the original specs state, its principles are immutable. Our implementation path might change, and we can even switch to a different LLM, but these core principles remain constant. Adding new features shouldn’t invalidate our earlier system design work.

Step 1: /specify

You start with a constitution: the unchanging principles and standards for your project. It’s the backbone of everything that comes after.

GitHub’s Spec Kit can generate a detailed spec file from even a one-line input like “I need a photo album site that allows me to drop and share photos with friends.” It even marks uncertainties with [NEEDS CLARIFICATION], essentially flagging the traps LLMs usually fall into. Although this step is optional, I highly recommend running /clarify to address each ambiguity, refining your spec until it reflects exactly what you want the system to do.
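Part of what makes a marker like [NEEDS CLARIFICATION] useful is that it is easy to find mechanically. The sketch below lists the open questions in a spec before you run /clarify, assuming markers of the form `[NEEDS CLARIFICATION: question]` or the bare tag. The regex, the `open_questions` function, and the sample spec text are illustrative assumptions, not Spec Kit’s own tooling.

```python
import re

# Assumed marker shapes: "[NEEDS CLARIFICATION: question]" or a bare
# "[NEEDS CLARIFICATION]". This regex is illustrative, not Spec Kit's parser.
MARKER = re.compile(r"\[NEEDS CLARIFICATION(?::\s*([^\]]*))?\]")


def open_questions(spec_text: str) -> list[str]:
    """Return the text of every clarification marker, in document order."""
    questions = []
    for match in MARKER.finditer(spec_text):
        # A bare marker carries no question text; record a placeholder instead.
        questions.append((match.group(1) or "(unspecified)").strip())
    return questions


spec = """
- Users can share albums with friends [NEEDS CLARIFICATION: by link or by invite?]
- Photos have a maximum size [NEEDS CLARIFICATION]
"""
print(open_questions(spec))
# ['by link or by invite?', '(unspecified)']
```

An empty result is a reasonable (if rough) signal that the /clarify pass has done its job.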

By the end, you’ve got:

- A shared understanding of what success looks like

- A solid first draft of clear user stories, acceptance criteria, and detailed feature requirements

- Edge cases you probably wouldn’t have thought of

Step 2: /plan

We just finished our first stab at “why” – this is where the “how” comes in. /plan is where we feed the LLM our tech choices, constraints, standards, and organizational rules.

Backend in Node? React front end? Performance budgets? Security controls? Deployment quirks? Legacy interactions? All of it goes here. As Den notes in the GitHub blog:

Specs and plans become the home for security requirements, design system rules, compliance constraints, and integration details that are usually scattered across wikis, Slack threads, or someone’s brain.

Spec Kit turns all of this into:

- Architecture breakdowns

- Data models

- Test scenarios

- Quickstart documentation

- A clean folder structure

- Research notes

- Multiple plan options if you request them

Look at that beautiful list of functionality, including a very nifty app structure tree. OMG!

Step 3: /tasks

The third phase is where you ask the LLM to slice the plan we just created into bite-sized tasks—small enough to be safe, testable, and implementable without hallucination. It also flags “priority” tasks and provides a checklist view for the entire project.

This creates something rare: truly atomic, reviewable, deterministic units of work.
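Because the tasks come back as a checklist, progress tracking is easy to reason about. This minimal sketch assumes a hypothetical line format of `- [ ] T002 [P1] title` (checkbox, task ID, optional priority tag). Spec Kit’s generated task files may use different tags, so treat `TASK_LINE`, `parse_tasks`, and `next_up` as illustrative only.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical line format (check your own generated task list):
#   - [x] T001 Create project structure
#   - [ ] T002 [P1] Write failing tests for photo upload
TASK_LINE = re.compile(r"^- \[( |x)\] (T\d+)(?: \[(P\d)\])? (.+)$")


@dataclass
class Task:
    task_id: str
    done: bool
    priority: Optional[str]  # e.g. "P1", or None when untagged
    title: str


def parse_tasks(markdown: str) -> list[Task]:
    tasks = []
    for line in markdown.splitlines():
        m = TASK_LINE.match(line.strip())
        if m:  # headings, blank lines, and prose are skipped
            tasks.append(Task(m.group(2), m.group(1) == "x", m.group(3), m.group(4)))
    return tasks


def next_up(tasks: list[Task], priority: str = "P1") -> list[str]:
    """IDs of unfinished tasks carrying the given priority tag."""
    return [t.task_id for t in tasks if not t.done and t.priority == priority]


sample = """
## Phase 1: Setup
- [x] T001 Create project structure
- [ ] T002 [P1] Write failing tests for photo upload
- [ ] T003 [P1] Implement photo upload endpoint
- [ ] T004 [P2] Add album sharing links
"""
tasks = parse_tasks(sample)
done = sum(t.done for t in tasks)
print(f"{done}/{len(tasks)} done; next up: {next_up(tasks)}")
# 1/4 done; next up: ['T002', 'T003']
```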

The /analyze command is especially powerful—it has the agent audit its own plan to surface hidden risks or missing pieces.
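One kind of audit this enables is a coverage check across artifacts. The sketch below is in the spirit of /analyze, not a reproduction of it: it assumes requirements carry IDs like `FR-001` and that tasks cite those IDs, then reports requirements no task covers and tasks that cite requirements the spec doesn’t define. `REQ_ID` and `coverage_report` are hypothetical names.

```python
import re

# Hypothetical cross-artifact check. The "FR-001" ID style is an assumption.
REQ_ID = re.compile(r"\bFR-\d{3}\b")


def coverage_report(spec: str, tasks: str) -> dict[str, list[str]]:
    spec_ids = set(REQ_ID.findall(spec))
    task_ids = set(REQ_ID.findall(tasks))
    return {
        "uncovered": sorted(spec_ids - task_ids),  # requirements with no task
        "unknown": sorted(task_ids - spec_ids),    # tasks citing missing requirements
    }


spec = "FR-001 Upload photos\nFR-002 Share albums\nFR-003 Delete photos"
tasks = "T001 Upload endpoint (FR-001)\nT002 Share links (FR-002, FR-004)"
print(coverage_report(spec, tasks))
# {'uncovered': ['FR-003'], 'unknown': ['FR-004']}
```

Both lists point at the same failure mode the article warns about: a plan that has quietly drifted from the spec.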

At first glance, this is a nearly overwhelming amount of work. Where to start? Thankfully, it tells me which tasks are important:

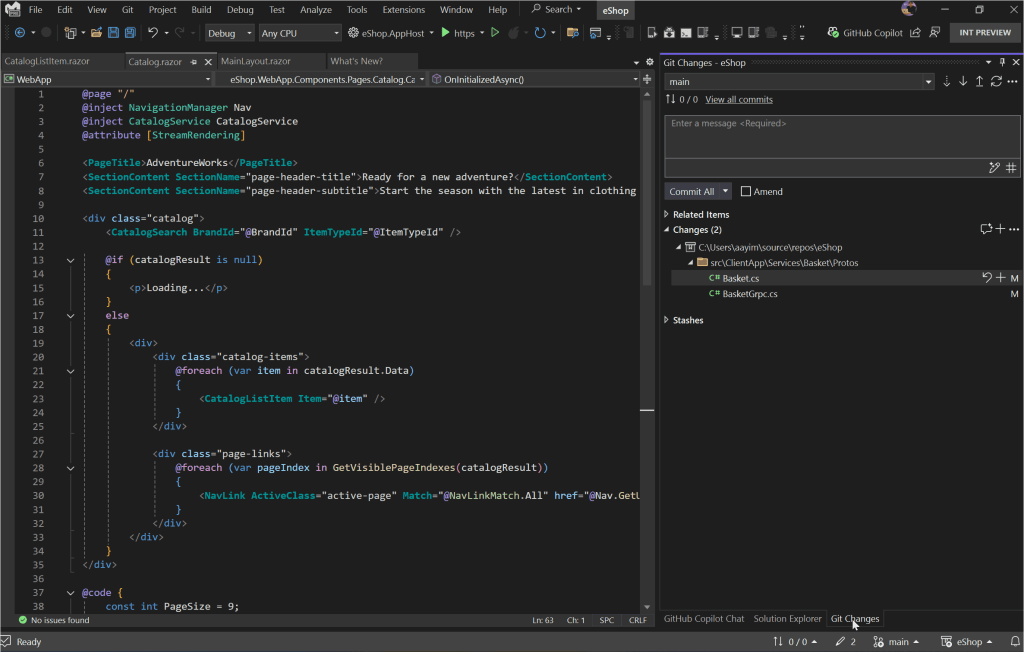

Step 4: /implement

Now the LLM finally writes code. But instead of working from guesswork and half-remembered context, the agent is now writing code from:

- A clarified spec

- A vetted plan

- A task list

- Test requirements

- An architectural contract

- Immutable principles

You can implement by phase or by task range. Smaller ranges work better for large projects (context windows get spicy otherwise).
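The batching idea behind implementing by task range fits in a few lines: pick a contiguous slice of task IDs and split it into small groups, each handed to the agent in a separate run. The `task_batches` helper and its default batch size of 3 are hypothetical. How you phrase a range in your /implement prompt is up to you.

```python
# Hypothetical helper: select a contiguous range of task IDs and split it
# into small batches so each implementation run keeps a modest context.
def task_batches(task_ids: list[str], start: str, end: str, size: int = 3) -> list[list[str]]:
    lo, hi = task_ids.index(start), task_ids.index(end)
    if lo > hi:
        raise ValueError("start must come before end")
    selected = task_ids[lo : hi + 1]
    return [selected[i : i + size] for i in range(0, len(selected), size)]


ids = [f"T{n:03d}" for n in range(1, 21)]  # T001 through T020
for batch in task_batches(ids, "T005", "T012"):
    print("implement", batch[0], "through", batch[-1])
# implement T005 through T007
# implement T008 through T010
# implement T011 through T012
```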

The best part? Updating a feature is now simple: change the spec, regenerate the plan, regenerate tasks, re-implement. All of the heavy lifting that used to discourage change is gone.
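That update loop is essentially change propagation: a change upstream invalidates everything downstream of it. The minimal sketch below assumes you record a hash of each artifact whenever you regenerate from it, and it tells you which steps to rerun. The `ORDER` list, `digest`, and `stale_artifacts` are hypothetical names, and Spec Kit does not necessarily track anything like this.

```python
import hashlib

# Hypothetical change tracking: compare each upstream artifact against the
# hash recorded at the last regeneration.
ORDER = ["spec", "plan", "tasks", "code"]


def digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def stale_artifacts(current: dict[str, str], recorded: dict[str, str]) -> list[str]:
    """current: artifact -> text now; recorded: artifact -> hash at last regeneration."""
    for i, name in enumerate(ORDER[:-1]):
        if digest(current[name]) != recorded.get(name):
            # Everything after the first changed artifact must be regenerated.
            return ORDER[i + 1 :]
    return []


artifacts = {"spec": "Share albums by link", "plan": "Node + React", "tasks": "T001..T010"}
recorded = {name: digest(text) for name, text in artifacts.items()}
print(stale_artifacts(artifacts, recorded))  # []
artifacts["spec"] = "Share albums by link or by invite"
print(stale_artifacts(artifacts, recorded))  # ['plan', 'tasks', 'code']
```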

Summing Things Up

The real magic of SDD isn’t the commands—it’s the mindset:

- Specs are living, executable artifacts

- Requirements and architecture stay fresh

- Tests are generated before code

- LLMs stop improvising

- Creativity shifts from plumbing to design

- Consistency is enforced, not hoped for

- Documentation emerges automatically

- Adding features becomes a natural loop

- Legacy modernization becomes sane again

As AWS and GitHub both point out, vibe coding is intoxicating but fragile. It struggles with large codebases, complex tasks, missing context, and unspoken decisions. SDD fixes the brittleness without killing the creativity.

It keeps the fun of vibe coding, but adds discipline, traceability, and clarity—like pairing with a brilliant junior dev who follows instructions with perfect literalness.

I do think Spec-Driven Development will change very rapidly over the next few years, but it’s definitely here to stay. It’s in line with how AI coding agents are meant to work, and it lets us focus on the creative and business implications of what we’re writing: a force multiplier for the innovative developer.

For Future Research

I’ve already mentioned wanting to do more work on having the coding agent generate different approaches for comparison, and on what the implementation might look like with models other than Claude Sonnet.

Den makes some interesting statements in that GitHub blog article. First, on “feature work on existing systems,” he calls out that advanced context engineering practices might be needed. What are these, exactly?

A second point follows right after:

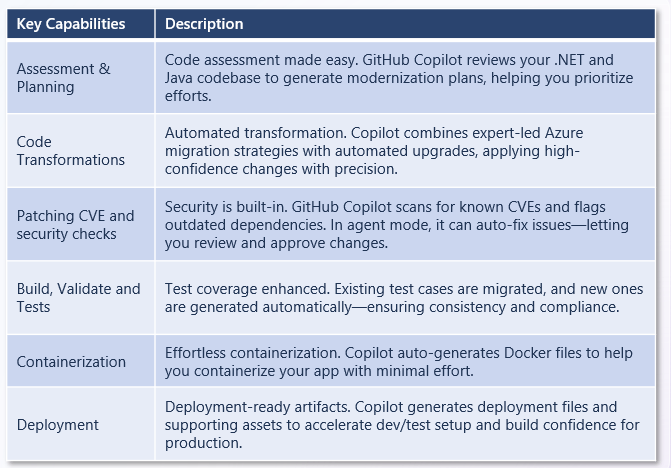

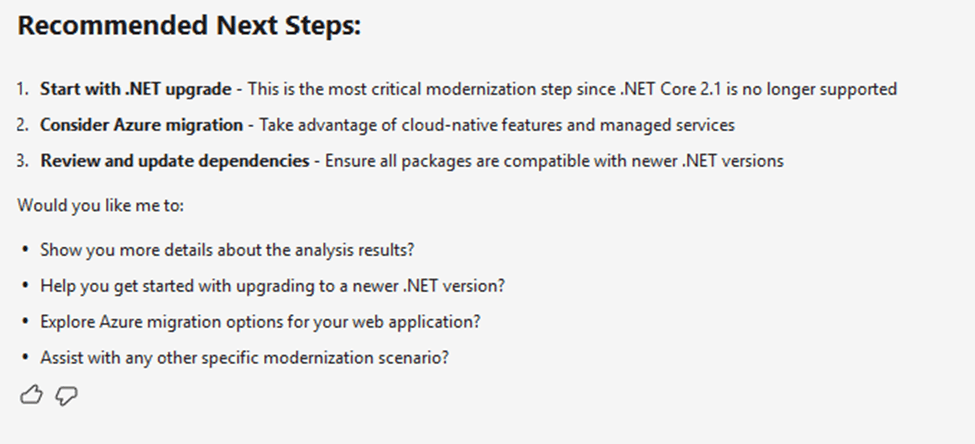

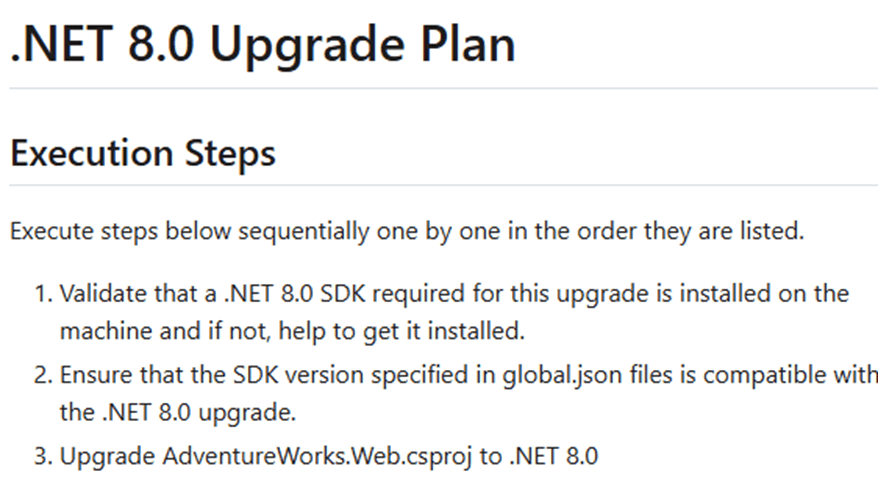

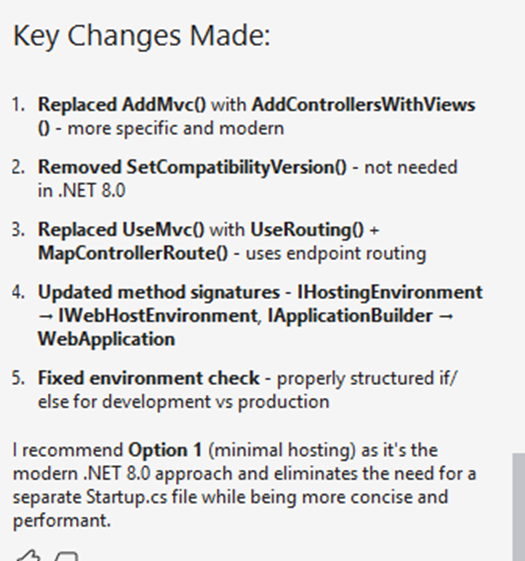

“Legacy modernization: When you need to rebuild a legacy system, the original intent is often lost to time. With the spec-driven development process offered in Spec Kit, you can capture the essential business logic in a modern spec, design a fresh architecture in the plan, and then let the AI rebuild the system from the ground up, without carrying forward inherited technical debt.”

I’d like to see this! We need more videos demonstrating how to splice new features into a large existing codebase.

References

- The source repo and where you should start – SDD with Spec Kit (https://github.com/github/spec-kit)

- Video goodness: Den Delimarsky’s 40-minute overview, “The ONLY guide you’ll need for GitHub Spec Kit”

- See the detailed process walkthrough here.

- Background founding principles: a must-read, even if lengthy. It all comes from this. For example, the Development Philosophy section at the end explains why testing and SDD go together like PB&J, and how these guiding principles help move us away from monolithic big balls of mud.

- I liked this video very much because it walks through the SDD lifecycle very clearly. Good sample prompts as well.

- The Uncommon Engineer spent half a day experimenting with SDD and found it frustrating. Among his conclusions: specifications can actually lead to procrastination (user feedback is the only thing that matters), you should start embarrassingly simple with your specs, and testing specification compliance is NOT the same thing as testing user value.

- Den Delimarsky talks vibe coding and SDD on the GitHub blog. “We treat coding agents like search engines when we should be treating them more like literal-minded pair programmers. They excel at pattern recognition but still need unambiguous instructions.”

- Dr. Werner Vogels, AWS re:Invent 2025 keynote. About 40 minutes in, he talks about SDD; at 46 minutes, Kiro and function-driven development: “The best way to learn is to fail and be gently corrected. You can study grammar all you like – but really learning is stumbling into a conversation and somebody helps you to get it right. Software works the same way. You can read documentation endlessly – but it is the failed builds and the broken assumptions that really teaches you how a system behaves.”

- Tomas Vesely from GitHub explores writing a Go app with SDD. Interestingly, his compilation slowed over time.